DebugLLM

Design for Increased Accuracy in LLM-Based Program Repair

Summer 2024, Heidelberg

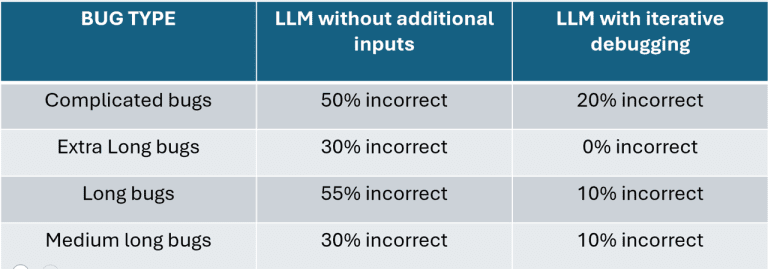

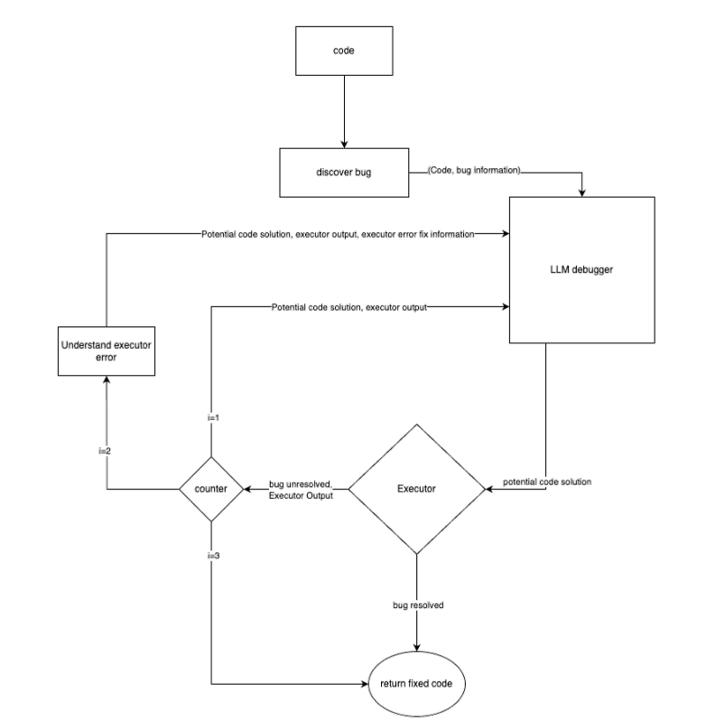

Task: Exploit LLMs for code debugging and repair, building on existing research work. Use LLM meta-strategies and feedback from runtime data.
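A minimal sketch of what "feedback from runtime data" can look like in a repair loop, assuming the LLM is any function that maps a prompt string to candidate source code (`llm` here is a hypothetical stand-in, not a specific API) and that the tests are plain Python assertions. Each round runs the candidate, captures the traceback, and puts it into the next prompt. `run_with_feedback` and `repair_loop` are illustrative names.

```python
import traceback
from typing import Callable, Optional

# Hypothetical LLM interface: any function mapping a prompt string to candidate source code.
LLM = Callable[[str], str]


def run_with_feedback(source: str, test_code: str) -> Optional[str]:
    """Run the candidate and its tests; return the traceback text on failure, None on success."""
    namespace: dict = {}
    try:
        # NOTE: no sandboxing here; real use should isolate untrusted candidate code.
        exec(source, namespace)     # define the candidate's functions
        exec(test_code, namespace)  # run the test assertions against them
    except Exception:
        return traceback.format_exc()
    return None


def repair_loop(buggy: str, test_code: str, llm: LLM, max_rounds: int = 3) -> Optional[str]:
    """Ask the LLM for fixes, feeding back the runtime error each round, until the tests pass."""
    candidate = buggy
    for _ in range(max_rounds):
        error = run_with_feedback(candidate, test_code)
        if error is None:
            return candidate
        prompt = (
            "Fix the bug in this Python code.\n\n"
            f"Code:\n{candidate}\n"
            f"Runtime feedback:\n{error}\n"
            "Return only the corrected code."
        )
        candidate = llm(prompt)
    # Check the candidate produced in the final round.
    return candidate if run_with_feedback(candidate, test_code) is None else None


# Usage with a stand-in "LLM" that always returns the same fix.
buggy = "def add(a, b):\n    return a - b\n"
tests = "assert add(2, 3) == 5\n"
fixed = repair_loop(buggy, tests, lambda prompt: "def add(a, b):\n    return a + b\n")
print(fixed)
```

Returning `None` when no candidate passes keeps "fixed" and "not fixed" easy to count, which is what an evaluation metric needs.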

Project Objectives

- Find, study and summarize related research papers

- Create a simple baseline routine for fixing bugs

- Build a metric system for evaluating performance

- Identify and implement building blocks from scientific papers

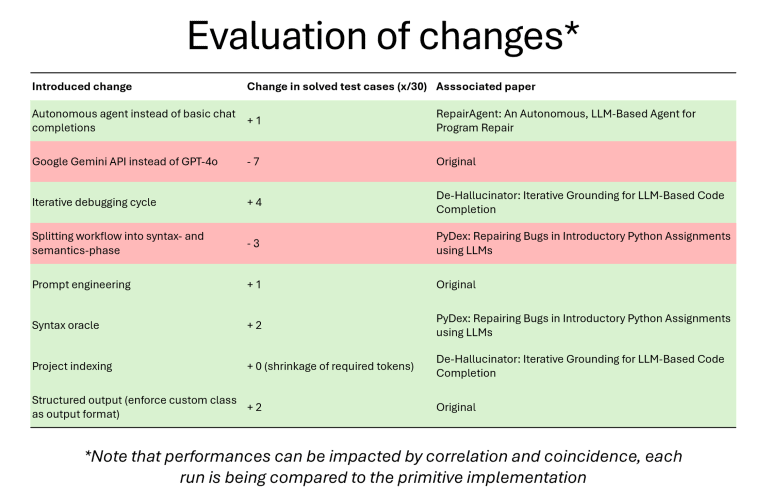

- Compare the performance of each single change

- Keep the best-performing workflow change

- Reach reasonable performance (fix >50% of everyday bugs and beat basic initial model prompting)
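The objectives above (a metric system, comparing single changes, keeping the best one, and a >50% target) can be sketched as a small ablation harness. This is a sketch under assumptions: each workflow is modeled as a hypothetical function that takes a benchmark bug ID and returns `True` if its patch passed the tests, and the variant names (`syntax_oracle`, `static_context`) and toy results are illustrative, not measured data.

```python
from typing import Callable, Dict, Sequence, Tuple

# Hypothetical: a workflow takes a benchmark bug ID and returns True if its patch passed the tests.
Workflow = Callable[[str], bool]


def fix_rate(workflow: Workflow, bugs: Sequence[str]) -> float:
    """Share of benchmark bugs fixed by the workflow, between 0.0 and 1.0."""
    if not bugs:
        return 0.0
    return sum(1 for bug in bugs if workflow(bug)) / len(bugs)


def keep_best_change(
    baseline: Workflow, variants: Dict[str, Workflow], bugs: Sequence[str]
) -> Tuple[str, Dict[str, float]]:
    """Score the baseline and each single-change variant; return the best name and all scores."""
    scores = {"baseline": fix_rate(baseline, bugs)}
    for name, workflow in variants.items():
        scores[name] = fix_rate(workflow, bugs)
    # max() returns the first maximum, so the baseline wins ties:
    # a change is only kept if it is strictly better.
    best = max(scores, key=scores.__getitem__)
    return best, scores


# Usage with toy workflows on a four-bug benchmark (illustrative only).
bugs = ["bug1", "bug2", "bug3", "bug4"]
baseline = lambda bug: bug == "bug1"  # fixes 1 of 4
variants = {
    "syntax_oracle": lambda bug: bug in {"bug1", "bug2", "bug3"},  # fixes 3 of 4
    "static_context": lambda bug: bug in {"bug1", "bug2"},         # fixes 2 of 4
}
best, scores = keep_best_change(baseline, variants, bugs)
print(best, scores[best], scores[best] > 0.5)
```

Letting the baseline win ties is one reasonable design choice: a workflow change only survives if it measurably helps.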

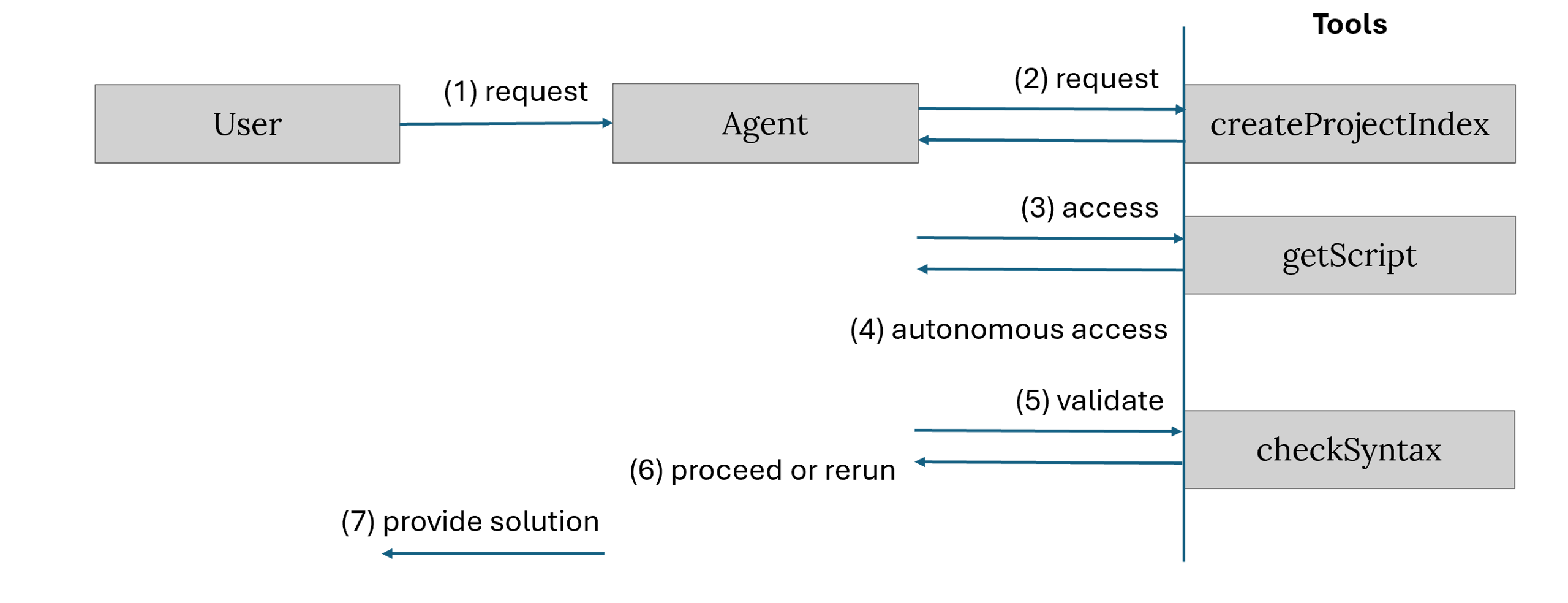

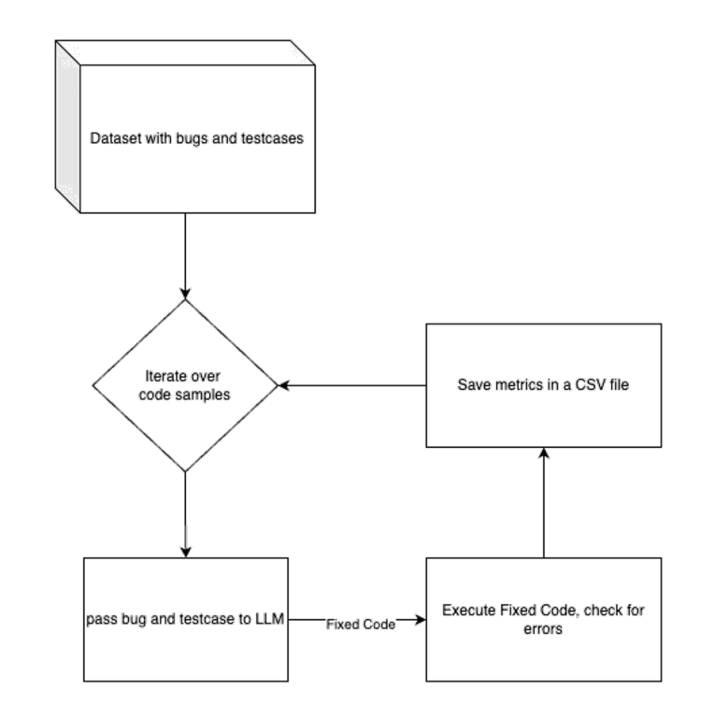

Architectural Design

The following steps are inspired by specific research papers:

(2) Static pre-analysis: "De-Hallucinator: Iterative Grounding for LLM-Based Code Completion"

(2)-(4) LLM agent, middleware, and tools: "RepairAgent: An Autonomous, LLM-Based Agent for Program Repair"

(5) Syntax oracle: "PyDex: Repairing Bugs in Introductory Python Assignments using LLMs"

(6) Iterative prompt augmentation: "De-Hallucinator: Iterative Grounding for LLM-Based Code Completion"
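For step (2), a static pre-analysis could look roughly like the sketch below: parse the project once with Python's `ast` module, index each function's signature, and later pull out the signatures of functions a code snippet calls so they can be placed in the prompt. This is a simplified stand-in with exact name matching, not De-Hallucinator's actual retrieval; the function names are hypothetical, and `ast.unparse` needs Python 3.9+. The snippet must already parse.

```python
import ast
from typing import Dict, List


def index_project_apis(project_source: str) -> Dict[str, str]:
    """Static pass: map each function defined in the project to a one-line signature."""
    index: Dict[str, str] = {}
    for node in ast.walk(ast.parse(project_source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            index[node.name] = f"def {node.name}({ast.unparse(node.args)})"
    return index


def called_names(code: str) -> List[str]:
    """Names of functions called in `code`, e.g. `foo(...)` or `obj.foo(...)`."""
    names: List[str] = []
    for node in ast.walk(ast.parse(code)):
        if isinstance(node, ast.Call):
            if isinstance(node.func, ast.Name):
                names.append(node.func.id)
            elif isinstance(node.func, ast.Attribute):
                names.append(node.func.attr)
    return names


def relevant_signatures(code: str, index: Dict[str, str]) -> List[str]:
    """Project signatures for the functions `code` calls, deduplicated, for the prompt."""
    return [index[n] for n in dict.fromkeys(called_names(code)) if n in index]


project = (
    "def load_config(path, strict=False):\n"
    "    ...\n"
    "def save_report(data, *, fmt='json'):\n"
    "    ...\n"
)
snippet = "cfg = load_config('settings.toml')\nprint(cfg)\n"
print(relevant_signatures(snippet, index_project_apis(project)))
```

Built-ins such as `print` are ignored because they are not in the project index.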
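For steps (2)-(4), the middleware sits between the LLM agent and its tools. A minimal sketch, assuming the agent emits tool calls as JSON of the form `{"tool": ..., "args": {...}}` (an assumed format, not RepairAgent's actual protocol) and that tools return a text observation. The `Middleware` class and the `read_file` tool are hypothetical; malformed calls come back as error text so the agent can correct itself instead of crashing the loop.

```python
import json
from typing import Callable, Dict

# Hypothetical tool signature: keyword arguments in, text observation out.
Tool = Callable[..., str]


class Middleware:
    """Parses an LLM tool call, runs the matching tool, and returns an observation string."""

    def __init__(self) -> None:
        self.tools: Dict[str, Tool] = {}

    def register(self, name: str, fn: Tool) -> None:
        self.tools[name] = fn

    def handle(self, llm_output: str) -> str:
        try:
            call = json.loads(llm_output)
            name, args = call["tool"], call.get("args", {})
        except (json.JSONDecodeError, KeyError, TypeError, AttributeError) as exc:
            return f"ERROR: could not parse tool call ({exc!r})"
        if name not in self.tools:
            return f"ERROR: unknown tool '{name}'. Available: {sorted(self.tools)}"
        try:
            return self.tools[name](**args)
        except Exception as exc:  # report tool failures back to the agent as text
            return f"ERROR: tool '{name}' failed: {exc!r}"


# Usage with an in-memory "repository".
files = {"calc.py": "def add(a, b):\n    return a - b\n"}
mw = Middleware()
mw.register("read_file", lambda path: files.get(path, f"no such file: {path}"))
print(mw.handle('{"tool": "read_file", "args": {"path": "calc.py"}}'))
print(mw.handle('{"tool": "delete_repo"}'))
```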
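For step (5), one way a syntax oracle can work is as a cheap gate in front of the test suite: reject any candidate patch that does not even compile, and keep the error message as feedback for the next prompt. This sketch only shows that gating check, assuming this is the oracle's role; it is not PyDex's implementation, and `syntax_oracle` and `first_valid` are hypothetical names. The built-in `compile()` parses the code without running it.

```python
from typing import List, Optional, Tuple


def syntax_oracle(candidate: str) -> Optional[str]:
    """Return None if `candidate` parses as Python, otherwise a short message for the LLM."""
    try:
        compile(candidate, "<candidate>", "exec")  # parse/compile only; nothing is executed
    except SyntaxError as exc:  # also covers IndentationError
        return f"SyntaxError at line {exc.lineno}: {exc.msg}"
    except ValueError as exc:  # e.g. null bytes in the source on older Python versions
        return f"Invalid source: {exc}"
    return None


def first_valid(candidates: List[str]) -> Tuple[Optional[str], List[str]]:
    """Return the first syntactically valid candidate plus the messages for rejected ones."""
    rejected: List[str] = []
    for candidate in candidates:
        message = syntax_oracle(candidate)
        if message is None:
            return candidate, rejected
        rejected.append(message)
    return None, rejected


patches = [
    "def add(a, b)\n    return a + b\n",   # missing colon
    "def add(a, b):\n    return a + b\n",  # valid
]
patch, feedback = first_valid(patches)
print(patch)
print(feedback)
```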
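For step (6), iterative prompt augmentation can be sketched as a loop: query the model, look up project APIs that its output calls, add their signatures to the prompt, and query again until no new context turns up. This is a simplified sketch of the idea, assuming a hypothetical `llm` callable and a prebuilt name-to-signature index; a regular expression for `name(` stands in for real retrieval.

```python
import re
from typing import Callable, Dict, List

# Hypothetical LLM interface: prompt string in, code completion out.
LLM = Callable[[str], str]
CALL_RE = re.compile(r"\b([A-Za-z_]\w*)\s*\(")  # crude detection of called names


def iterative_augment(task: str, api_index: Dict[str, str], llm: LLM, max_iters: int = 3) -> str:
    """Re-prompt with signatures of project APIs the previous completion used."""
    context: List[str] = []
    completion = llm(task)
    for _ in range(max_iters):
        new = [
            api_index[name]
            for name in CALL_RE.findall(completion)
            if name in api_index and api_index[name] not in context
        ]
        if not new:  # nothing new to ground on: stop iterating
            break
        context.extend(dict.fromkeys(new))  # deduplicate, keep order
        prompt = "Relevant project APIs:\n" + "\n".join(context) + "\n\n" + task
        completion = llm(prompt)
    return completion


# Usage with a stand-in model that only uses `strict=True` once it sees the signature.
api_index = {"load_config": "def load_config(path, strict=False)"}
calls: List[str] = []


def fake_llm(prompt: str) -> str:
    calls.append(prompt)
    if "def load_config" in prompt:
        return "cfg = load_config('a.toml', strict=True)"
    return "cfg = load_config('a.toml')"


result = iterative_augment("Load the config file a.toml strictly.", api_index, fake_llm)
print(result, len(calls))
```

The loop stops as soon as an iteration adds no new signatures, which bounds the number of model calls even without `max_iters`.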

Evaluation